We present a novel adaptive multimodal fusion approach based on a mixture of convolutional neural network (CNN) models, which can be used in robotic applications such as object detection or segmentation. Our approach has been developed for robots that have to operate in changing environments, where the changing conditions affect sensor noise. Examples include robots that have to operate in both dark indoor and bright outdoor scenarios, at different times of the day, or, in the case of autonomous cars, under different weather conditions. The approach is being developed by members of the Autonomous Intelligent Systems group at the University of Freiburg, Germany.

Adaptive Multimodal Fusion for Object Detection

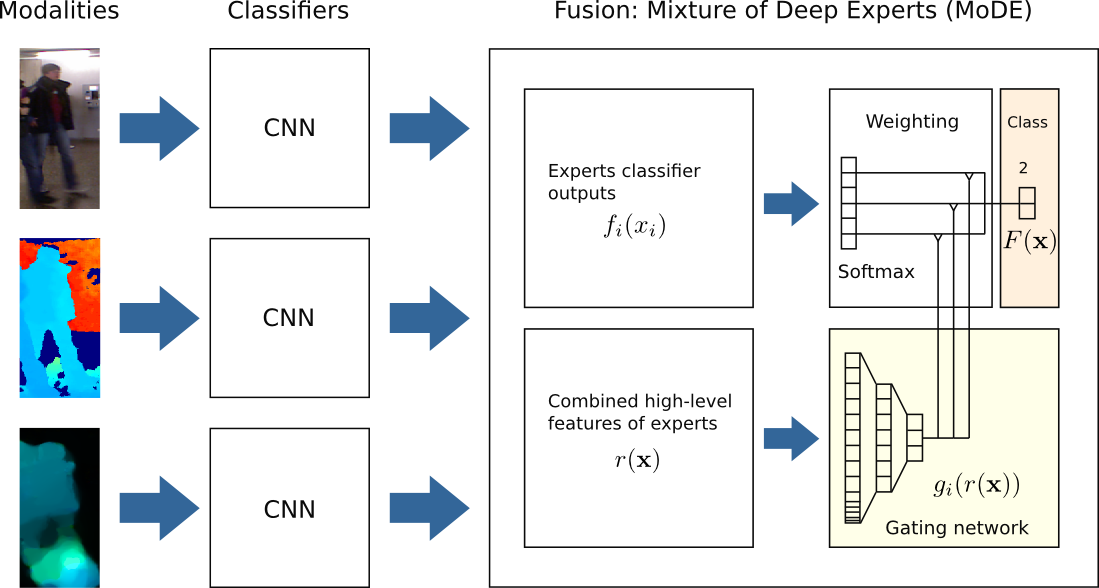

Our detection approach is based on a mixture of deep network experts (MoDE) whose outputs are fused by an additional gating network. Each expert is a downsampled version of Google's Inception architecture. The fusion is learned in a supervised way: the gating network is trained on a high-level feature representation of the expert CNNs. Thus, for each input sample, the gating network assigns an adaptive weight to each expert, and the weighted expert outputs produce the final classification output. The weighting is done individually for each detected object in the frame. In our IROS16 paper, we show results for RGB-D people detection in changing environments, but the approach can be extended to more sensor modalities and different architectures.
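The gating step described above can be illustrated with a minimal NumPy sketch. It is not the implementation from the paper: the function names (`softmax`, `fuse_experts`), the single linear gating layer, and all array shapes are assumptions made for illustration. It only shows how per-sample gate weights, computed from concatenated expert features, can combine the experts' class probabilities.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def fuse_experts(expert_features, expert_probs, gate_weights, gate_bias):
    """Fuse M experts with a (hypothetical) single-layer gating network.

    expert_features: list of M arrays, each (N, D), high-level features
                     of one expert for N detected objects
    expert_probs:    array (M, N, C), class probabilities of each expert
    gate_weights:    array (M * D, M), assumed linear gating layer
    gate_bias:       array (M,)
    Returns the fused probabilities (N, C) and the gate weights (N, M).
    """
    # The gating network sees the features of all experts at once.
    gate_input = np.concatenate(expert_features, axis=1)           # (N, M*D)
    # One weight per expert and per object; weights sum to 1 per object.
    gate = softmax(gate_input @ gate_weights + gate_bias, axis=1)  # (N, M)
    # Weighted sum of the expert outputs, separately for every object.
    fused = np.einsum("nm,mnc->nc", gate, expert_probs)            # (N, C)
    return fused, gate
```

Because the gate weights are computed per row, two objects in the same frame can rely on different experts, for example depth for an object in shadow and RGB for a well-lit one.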

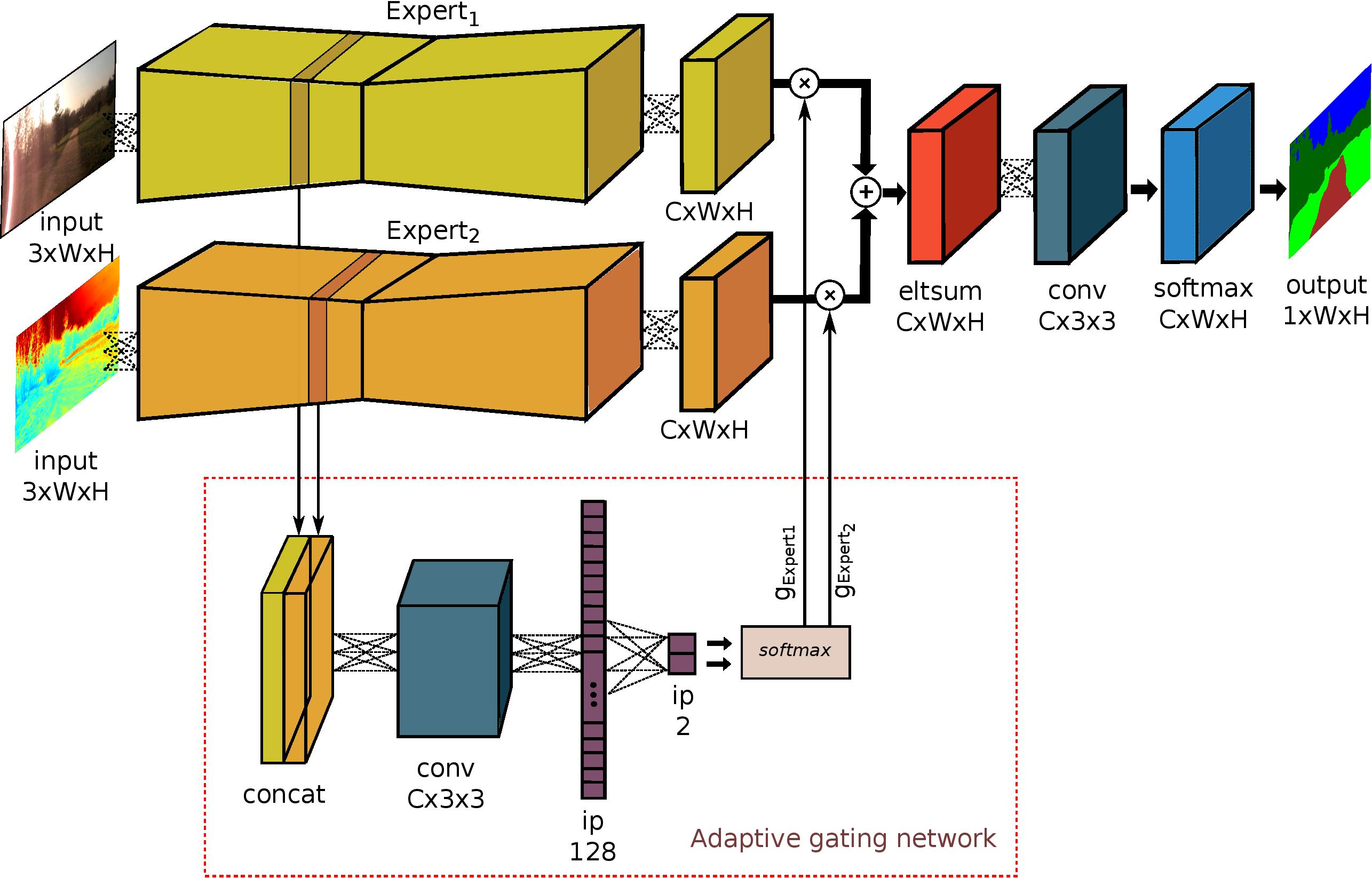

Our Mixture of Deep Experts approach can also be applied to semantic segmentation. In our ICRA17 paper and in the IROS16 workshop paper, a class-wise and an expert-wise weighting are learned, respectively. We show state-of-the-art segmentation results on a variety of datasets that contain diverse conditions, including rain, summer, winter, dusk, fall, night and sunset. Thus, the approach enables a multistream deep neural network architecture to learn features from complementary modalities and spectra that are resilient to commonly observed environmental disturbances.
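The difference between expert-wise and class-wise weighting can be sketched as follows. This is an illustrative NumPy example under stated assumptions, not the code from either paper: the function names and array shapes are hypothetical, and the weights are passed in as given values, whereas in the approach described above they are learned by the gating network.

```python
import numpy as np

def fuse_expert_wise(score_maps, weights):
    """Expert-wise fusion: one scalar weight per expert.

    score_maps: array (M, C, H, W), per-pixel class scores of M experts
    weights:    array (M,), assumed to sum to 1
    """
    # Every class of an expert is scaled by the same weight.
    return np.tensordot(weights, score_maps, axes=(0, 0))  # (C, H, W)

def fuse_class_wise(score_maps, weights):
    """Class-wise fusion: one weight per expert and per class.

    weights: array (M, C), assumed to sum to 1 over the expert axis
    """
    # Each class can prefer a different expert.
    return np.einsum("mc,mchw->chw", weights, score_maps)  # (C, H, W)
```

With class-wise weights, the fused output can, for instance, take one class mostly from one modality and another class mostly from a second modality, which a single weight per expert cannot express.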

Architecture of Mixture of Deep Network Experts For Semantic Segmentation

We refer to deepscene.cs.uni-freiburg.de for a demo of the segmentation and the corresponding datasets.